Upgrade RAC Grid and Database from 11.2.0.4 to 12.1.0.2 :-

Main steps :

Grid :-

- Check all services are up and running from 11gR2 GRID_HOME

- Perform backup of OCR, voting disk and Database.

- Create a new directory for installing the 12c software on both RAC nodes.

- Run runcluvfy.sh to check for errors.

- Install and upgrade GRID from 11gR2 to 12cR1

- Verify upgrade version

Database :-

- Backup the database before the upgrade

- Database upgrade Pre-check

- Creating Stage for 12c database software

- Creating directory for 12c oracle home

- Check the pre upgrade status.

- Unzip 12c database software in stage

- Install the 12.1.0.2 using the software only installation

- Run the preupgrd.sql script in the existing 11.2.0.4 database from the newly installed 12c home.

- Run the DBUA to start the database upgrade.

- Database post upgrade check.

- Check Database version.

Environment variables for 11g database :-

GRID :

grid()

{

ORACLE_BASE=/u01/app/oracle; export ORACLE_BASE

ORACLE_HOME=/u01/app/11.2.0/grid; export ORACLE_HOME

export ORACLE_SID=+ASM1

ORACLE_TERM=xterm; export ORACLE_TERM

BASE_PATH=/usr/sbin:$PATH; export BASE_PATH

SQLPATH=/u01/app/oracle/scripts/sql:/u01/app/11.2.0/grid/rdbms/admin:/u01/app/oracle/product/11.2.0/dbhome_1/rdbms/admin; export SQLPATH

PATH=$ORACLE_HOME/bin:$BASE_PATH; export PATH

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib; export LD_LIBRARY_PATH

CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib; export CLASSPATH

}

DATABASE :

11g()

{

ORACLE_HOME=/u01/app/oracle/product/11.2.0/dbhome_1

export ORACLE_HOME

ORACLE_BASE=/u01/app/oracle

export ORACLE_BASE

ORACLE_SID=orcl11g1

export ORACLE_SID

LD_LIBRARY_PATH=$ORACLE_HOME/lib:/usr/lib:.

export LD_LIBRARY_PATH

LIBPATH=$ORACLE_HOME/lib32:$ORACLE_HOME/lib:/usr/lib:/lib

export LIBPATH

TNS_ADMIN=${ORACLE_HOME}/network/admin

export TNS_ADMIN

PATH=$ORACLE_HOME/bin:$PATH:.

export PATH

}

Upgrade GRID Infrastructure Software 12c :-

Check GRID Infrastructure software version and Clusterware status:

[oracle@racpb1 ~]$ grid
[oracle@racpb1 ~]$ crsctl query crs activeversion
Oracle Clusterware active version on the cluster is [11.2.0.4.0]
[oracle@racpb1 ~]$ crsctl check crs
CRS-4638: Oracle High Availability Services is online
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
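The version check above can also be scripted, so that a later step can refuse to run when the cluster is not at the expected release. This is only a minimal sketch that assumes the output format shown above; the function name crs_active_version is hypothetical and not part of any Oracle tool.

```shell
# Extract the bracketed version from `crsctl query crs activeversion` output.
# Reads the command output on stdin and prints the version, e.g. 11.2.0.4.0.
# (crs_active_version is a hypothetical helper name.)
crs_active_version() {
    sed -n 's/.*active version on the cluster is \[\([0-9.]*\)].*/\1/p'
}

# Usage on a cluster node (not executed here):
#   crsctl query crs activeversion | crs_active_version
```

On the first node before the upgrade this would be expected to print the 11.2.0.4.0 value seen in the session above.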

Verify all services are up and running from 11gR2 GRID Home :

[oracle@racpb1 ~]$ crsctl stat res -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       racpb1
               ONLINE  ONLINE       racpb2
ora.LISTENER.lsnr
               ONLINE  ONLINE       racpb1
               ONLINE  ONLINE       racpb2
ora.asm
               ONLINE  ONLINE       racpb1                   Started
               ONLINE  ONLINE       racpb2                   Started
ora.gsd
               OFFLINE OFFLINE      racpb1
               OFFLINE OFFLINE      racpb2
ora.net1.network
               ONLINE  ONLINE       racpb1
               ONLINE  ONLINE       racpb2
ora.ons
               ONLINE  ONLINE       racpb1
               ONLINE  ONLINE       racpb2
ora.registry.acfs
               ONLINE  ONLINE       racpb1
               ONLINE  ONLINE       racpb2
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       racpb2
ora.LISTENER_SCAN2.lsnr
      1        ONLINE  ONLINE       racpb1
ora.LISTENER_SCAN3.lsnr
      1        ONLINE  ONLINE       racpb1
ora.cvu
      1        ONLINE  ONLINE       racpb1
ora.oc4j
      1        ONLINE  ONLINE       racpb1
ora.orcl11g.db
      1        ONLINE  ONLINE       racpb1                   Open
      2        ONLINE  ONLINE       racpb2                   Open
ora.racpb1.vip
      1        ONLINE  ONLINE       racpb1
ora.racpb2.vip
      1        ONLINE  ONLINE       racpb2
ora.scan1.vip
      1        ONLINE  ONLINE       racpb2
ora.scan2.vip
      1        ONLINE  ONLINE       racpb1
ora.scan3.vip
      1        ONLINE  ONLINE       racpb1
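Reading a long resource table by eye is error-prone, so a small filter can list only the rows whose target is ONLINE but whose state is something else. The sketch below assumes the tabular layout printed by crsctl stat res -t (resource name on its own line, followed by one row per server or per instance number); offline_resources is a hypothetical helper name.

```shell
# List resources whose TARGET is ONLINE but whose STATE is not ONLINE.
# Reads `crsctl stat res -t` output on stdin; prints "name server state".
# (offline_resources is a hypothetical helper; the row layout is assumed
# to match the tabular output shown in this post.)
offline_resources() {
    awk '
        # A line with a single word that is not a dashed rule is a resource name
        NF == 1 && $1 !~ /^-+$/ { name = $1; next }
        # Local resource row: TARGET STATE SERVER [STATE_DETAILS]
        $1 == "ONLINE" && $2 != "ONLINE" { print name, $3, $2 }
        # Cluster resource row: INDEX TARGET STATE SERVER [STATE_DETAILS]
        $1 ~ /^[0-9]+$/ && $2 == "ONLINE" && $3 != "ONLINE" { print name, $4, $3 }
    '
}

# Usage on a cluster node (not executed here):
#   crsctl stat res -t | offline_resources
```

Resources such as ora.gsd, whose target is OFFLINE, are intentionally not reported, since they are not expected to be running.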

Check Database status and configuration :

[oracle@racpb1 ~]$ srvctl status database -d orcl11g
Instance orcl11g1 is running on node racpb1
Instance orcl11g2 is running on node racpb2
[oracle@racpb1 ~]$ srvctl config database -d orcl11g
Database unique name: orcl11g
Database name: orcl11g
Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1
Oracle user: oracle
Spfile: +DATA/orcl11g/spfileorcl11g.ora
Domain: localdomain.com
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools: orcl11g
Database instances: orcl11g1,orcl11g2
Disk Groups: DATA
Mount point paths:
Services:
Type: RAC
Database is administrator managed

Perform local backup of OCR :

[root@racpb1 ~]# mkdir -p /u01/ocrbkp
[root@racpb1 ~]# cd /u01/app/11.2.0/grid/bin/
[root@racpb1 bin]# ./ocrconfig -export /u01/ocrbkp/ocrfile

Move the 12c GRID Software to the server and unzip the software :

[oracle@racpb1 12102_64bit]$ unzip -d /u01/ linuxamd64_12102_grid_1of2.zip
Archive: linuxamd64_12102_grid_1of2.zip
creating: /u01/grid/
.
.
[oracle@racpb1 12102_64bit]$ unzip -d /u01/ linuxamd64_12102_grid_2of2.zip
Archive: linuxamd64_12102_grid_2of2.zip
creating: /u01/grid/stage/Components/oracle.has.crs/
.
.

Run cluvfy utility to pre-check any errors :

Execute runcluvfy.sh from the 12cR1 software location :

[oracle@racpb1 grid]$ ./runcluvfy.sh stage -pre crsinst -upgrade -rolling -src_crshome /u01/app/11.2.0/grid -dest_crshome /u01/app/12.1.0/grid -dest_version 12.1.0.2.0 -verbose

Make sure cluvfy completes successfully. If it reports any errors, fix them before starting the GRID 12cR1 upgrade. The cluvfy log is attached here.
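With the -verbose flag the cluvfy output is long, so it helps to save it to a file and pull out only the problem lines. The sketch below assumes that failed checks are reported with the words "failed" or "unsuccessful", which should be confirmed against your own log; cluvfy_failures and the log path in the usage lines are hypothetical.

```shell
# Print the lines of a saved runcluvfy.sh log that look like failures,
# prefixed with their line numbers. Returns non-zero when nothing matches.
# (cluvfy_failures is a hypothetical helper; the keywords are an assumption
# about how cluvfy words its failed checks.)
cluvfy_failures() {
    # $1: path to a file holding the saved runcluvfy.sh output
    grep -inE 'failed|unsuccessful' "$1"
}

# Usage (not executed here):
#   ./runcluvfy.sh stage -pre crsinst -upgrade ... -verbose > /tmp/cluvfy_pre.log 2>&1
#   if cluvfy_failures /tmp/cluvfy_pre.log; then echo "Fix the checks above first"; fi
```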

Stop the running 11g database :

[oracle@racpb1 ~]$ ps -ef|grep pmon
oracle 3953 1 0 Dec22 ? 00:00:00 asm_pmon_+ASM1
oracle 4976 1 0 Dec22 ? 00:00:00 ora_pmon_orcl11g1
oracle 23634 4901 0 00:55 pts/0 00:00:00 grep pmon
[oracle@racpb1 ~]$ srvctl stop database -d orcl11g
[oracle@racpb1 ~]$ srvctl status database -d orcl11g
Instance orcl11g1 is not running on node racpb1
Instance orcl11g2 is not running on node racpb2
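Before moving on, it is worth confirming that every instance really stopped. A minimal sketch, assuming the srvctl status wording shown above; all_instances_stopped is a hypothetical helper name.

```shell
# Succeed (exit 0) only when at least one instance is listed and none of
# them is reported as running. Reads `srvctl status database -d <db>`
# output on stdin. (all_instances_stopped is a hypothetical helper.)
all_instances_stopped() {
    awk '
        / is running on node /     { up++ }
        / is not running on node / { down++ }
        END { exit !(down > 0 && up == 0) }
    '
}

# Usage (not executed here):
#   srvctl status database -d orcl11g | all_instances_stopped && echo "Database is down"
```

Empty input is treated as a failure on purpose, so a srvctl error is not mistaken for a stopped database.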

Take GRID_HOME backup on both nodes :

[oracle@racpb1 ~]$ grid
[oracle@racpb1 ~]$ tar -cvf grid_home_11g.tar $ORACLE_HOME
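A home backup is only useful if the archive is readable, so the tar step can be wrapped in a helper that also lists the archive afterwards. This is a sketch under assumptions: backup_home is a hypothetical name, and because a Grid home normally contains some root-owned files, running it as root is likely needed to capture everything.

```shell
# Archive a directory and verify that the resulting tar file can be read.
# (backup_home is a hypothetical helper, not an Oracle utility.)
backup_home() {
    src=$1      # directory to archive, e.g. the 11g Grid home
    dest=$2     # tar file to create
    # -p preserves permissions; -C stores paths relative to the parent directory
    tar -cpf "$dest" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    # Listing the archive reads it end to end, which catches a truncated file
    tar -tf "$dest" > /dev/null
}

# Usage on each node (not executed here):
#   backup_home /u01/app/11.2.0/grid /u01/ocrbkp/grid_home_11g.tar
```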

Check Clusterware services status before upgrade :

[oracle@racpb1 ~]$ crsctl check cluster -all
**************************************************************
racpb1:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
racpb2:
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
**************************************************************
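The same check can be turned into a pass/fail gate before launching the installer. The sketch below assumes the layout shown above (a "node:" header line followed by the three CRS-4537, CRS-4529 and CRS-4533 "is online" messages per node); cluster_all_online is a hypothetical helper name.

```shell
# Succeed only when every node listed by `crsctl check cluster -all`
# reports CRS, CSS and EVM as online. Reads the command output on stdin.
# (cluster_all_online is a hypothetical helper; the message codes are the
# ones that appear in the output shown in this post.)
cluster_all_online() {
    awk '
        /^[A-Za-z0-9_.-]+:$/ { nodes++; next }             # node header, e.g. "racpb1:"
        /^CRS-(4537|4529|4533): .* is online$/ { online++ }
        END { exit !(nodes > 0 && online == 3 * nodes) }
    '
}

# Usage (not executed here):
#   crsctl check cluster -all | cluster_all_online || echo "Cluster is not fully online"
```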

Start the 12cR1 upgrade by executing runInstaller :

[oracle@racpb1 ~]$ cd /u01/
[oracle@racpb1 u01]$ cd grid/
[oracle@racpb1 grid]$ ./runInstaller
Starting Oracle Universal Installer...
Checking Temp space: must be greater than 415 MB. Actual 8565 MB Passed
Checking swap space: must be greater than 150 MB. Actual 5996 MB Passed
Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed
Preparing to launch Oracle Universal Installer from /tmp/OraInstall2018-12-23_01

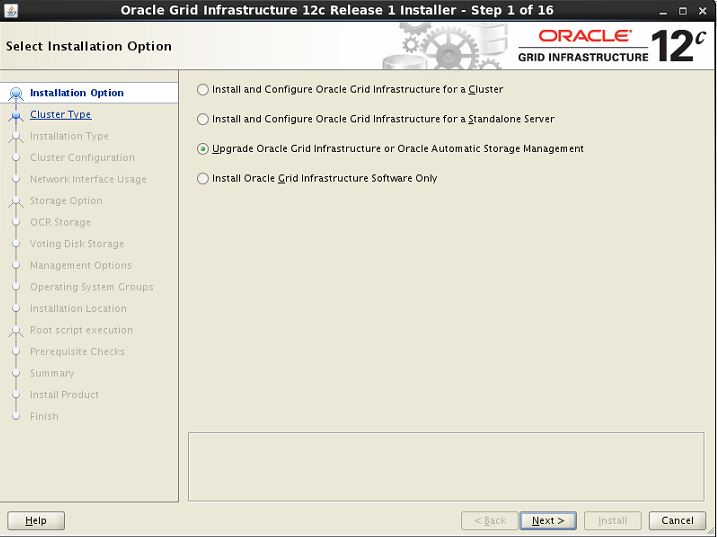

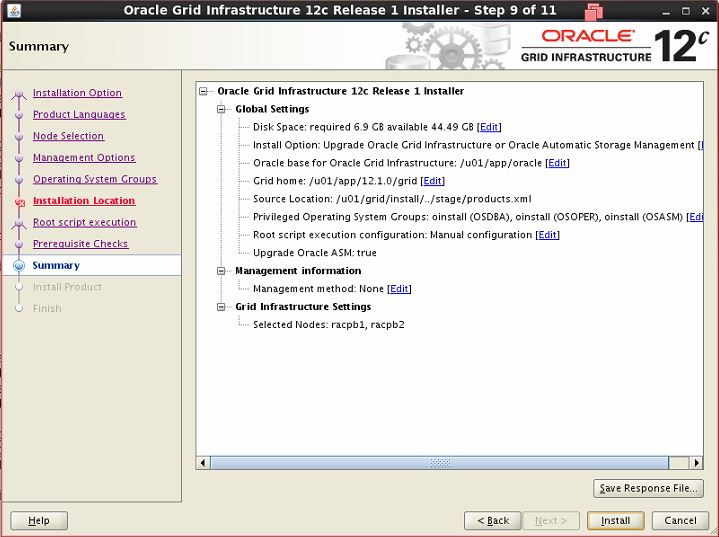

Select Upgrade option to upgrade GRID 12c infrastructure and ASM.

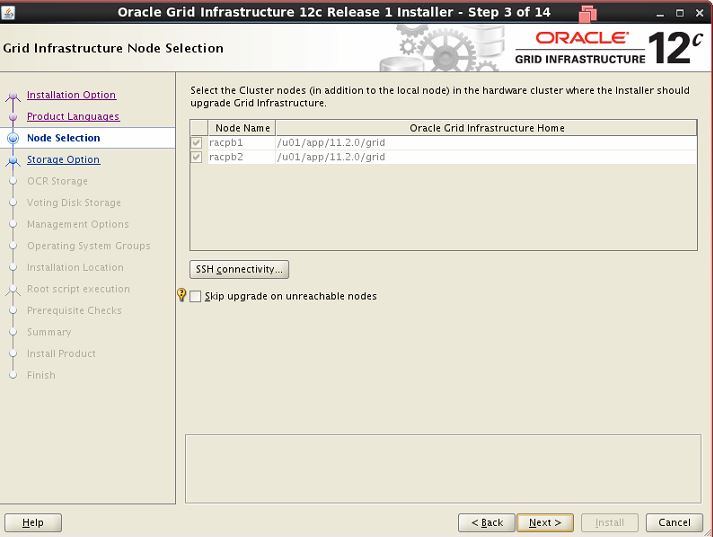

Check the public host names and existing GRID_HOME

Uncheck the EM cloud control option to disable EM.

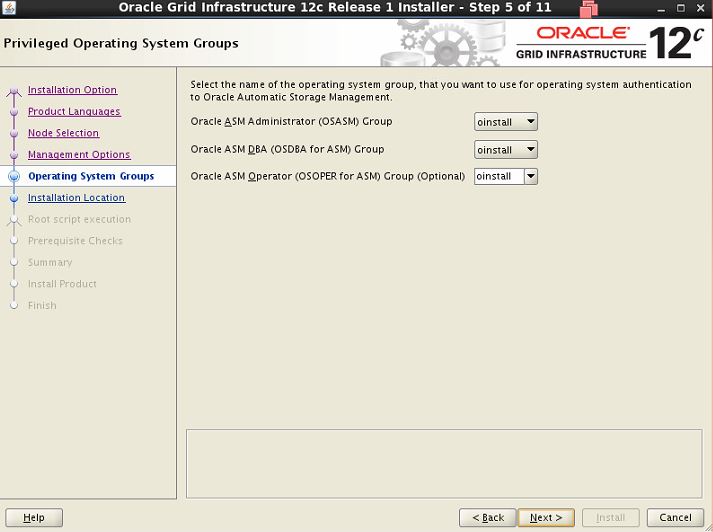

Specify location for ORACLE_BASE and ORACLE_HOME for 12c.

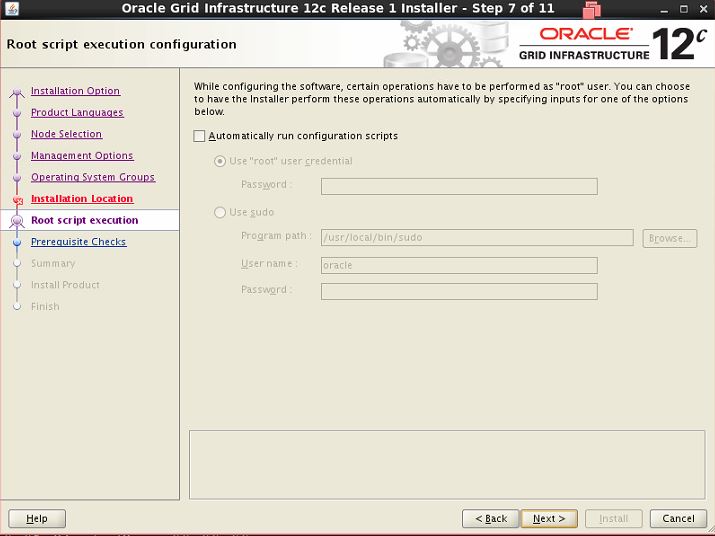

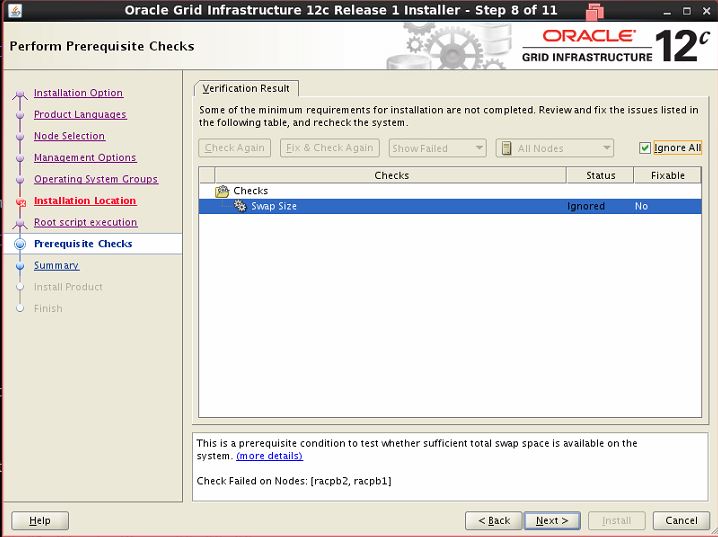

Ignore the swap size warning. The check expects swap to be twice the size of the memory in the server.

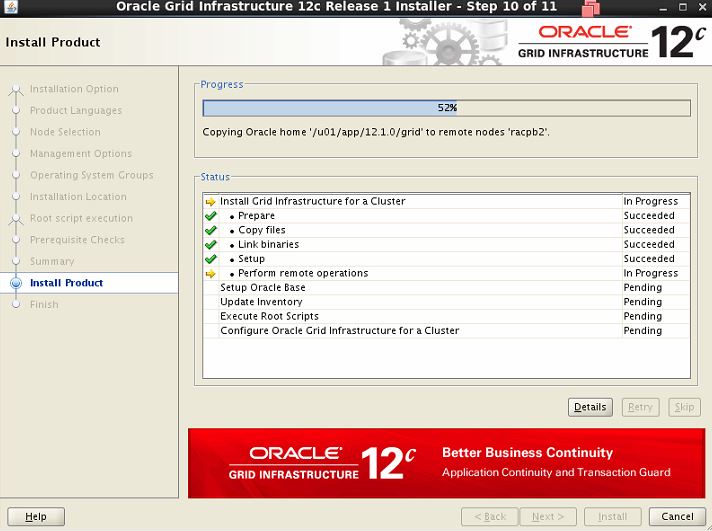

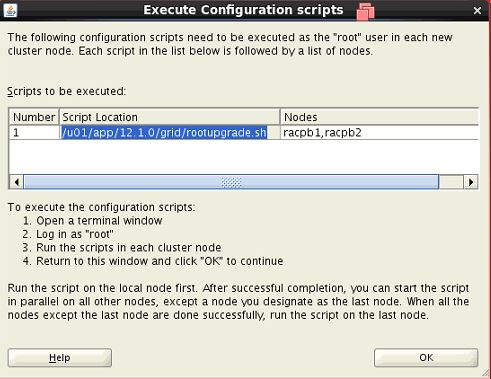

Execute the rootupgrade.sh script as root on both nodes, one node at a time :

First node (racpb1) :-

[root@racpb1 bin]# sh /u01/app/12.1.0/grid/rootupgrade.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= oracle
ORACLE_HOME= /u01/app/12.1.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
The contents of "dbhome" have not changed. No need to overwrite.
The contents of "oraenv" have not changed. No need to overwrite.
The contents of "coraenv" have not changed. No need to overwrite.
Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Using configuration parameter file: /u01/app/12.1.0/grid/crs/install/crsconfig_params
2018/12/23 12:18:59 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
2018/12/23 12:18:59 CLSRSC-4012: Shutting down Oracle Trace File Analyzer (TFA) Collector.
2018/12/23 12:19:08 CLSRSC-4013: Successfully shut down Oracle Trace File Analyzer (TFA) Collector.
2018/12/23 12:19:19 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
2018/12/23 12:19:22 CLSRSC-464: Starting retrieval of the cluster configuration data
2018/12/23 12:19:30 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.
2018/12/23 12:19:30 CLSRSC-363: User ignored prerequisites during installation
2018/12/23 12:19:38 CLSRSC-468: Setting Oracle Clusterware and ASM to rolling migration mode
2018/12/23 12:19:38 CLSRSC-482: Running command: '/u01/app/12.1.0/grid/bin/asmca -silent -upgradeNodeASM -nonRolling false -oldCRSHome /u01/app/11.2.0/grid -oldCRSVersion 11.2.0.4.0 -nodeNumber 1 -firstNode true -startRolling true'
ASM configuration upgraded in local node successfully.
2018/12/23 12:19:45 CLSRSC-469: Successfully set Oracle Clusterware and ASM to rolling migration mode
2018/12/23 12:19:45 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack
2018/12/23 12:20:36 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.
OLR initialization - successful
2018/12/23 12:24:43 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.conf'
CRS-4133: Oracle High Availability Services has been stopped.
CRS-4123: Oracle High Availability Services has been started.
2018/12/23 12:29:05 CLSRSC-472: Attempting to export the OCR
2018/12/23 12:29:06 CLSRSC-482: Running command: 'ocrconfig -upgrade oracle oinstall'
2018/12/23 12:29:23 CLSRSC-473: Successfully exported the OCR
2018/12/23 12:29:29 CLSRSC-486: At this stage of upgrade, the OCR has changed. Any attempt to downgrade the cluster after this point will require a complete cluster outage to restore the OCR.
2018/12/23 12:29:29 CLSRSC-541: To downgrade the cluster: 1. All nodes that have been upgraded must be downgraded.
2018/12/23 12:29:30 CLSRSC-542: 2. Before downgrading the last node, the Grid Infrastructure stack on all other cluster nodes must be down.
2018/12/23 12:29:30 CLSRSC-543: 3. The downgrade command must be run on the node racpb1 with the '-lastnode' option to restore global configuration data.
2018/12/23 12:29:55 CLSRSC-343: Successfully started Oracle Clusterware stack
clscfg: EXISTING configuration version 5 detected.
clscfg: version 5 is 11g Release 2.
Successfully taken the backup of node specific configuration in OCR.
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
2018/12/23 12:30:19 CLSRSC-474: Initiating upgrade of resource types
2018/12/23 12:31:12 CLSRSC-482: Running command: 'upgrade model -s 11.2.0.4.0 -d 12.1.0.2.0 -p first'
2018/12/23 12:31:12 CLSRSC-475: Upgrade of resource types successfully initiated.
2018/12/23 12:31:21 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Second node (racpb2) :-

[root@racpb2 ~]# sh /u01/app/12.1.0/grid/rootupgrade.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= oracle
ORACLE_HOME= /u01/app/12.1.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
The file "dbhome" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying dbhome to /usr/local/bin ...
The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying oraenv to /usr/local/bin ...
The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
Copying coraenv to /usr/local/bin ...
Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created
Finished running generic part of root script.
Now product-specific root actions will be performed.
Using configuration parameter file: /u01/app/12.1.0/grid/crs/install/crsconfig_params
2018/12/23 12:34:35 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
2018/12/23 12:35:15 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
2018/12/23 12:35:17 CLSRSC-464: Starting retrieval of the cluster configuration data
2018/12/23 12:35:24 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.
2018/12/23 12:35:24 CLSRSC-363: User ignored prerequisites during installation
ASM configuration upgraded in local node successfully.
2018/12/23 12:35:41 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack
2018/12/23 12:36:10 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.
OLR initialization - successful
2018/12/23 12:36:37 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.conf'
CRS-4133: Oracle High Availability Services has been stopped.
CRS-4123: Oracle High Availability Services has been started.
2018/12/23 12:39:54 CLSRSC-343: Successfully started Oracle Clusterware stack
clscfg: EXISTING configuration version 5 detected.
clscfg: version 5 is 12c Release 1.
Successfully taken the backup of node specific configuration in OCR.
Successfully accumulated necessary OCR keys.
Creating OCR keys for user 'root', privgrp 'root'..
Operation successful.
Start upgrade invoked..
2018/12/23 12:40:21 CLSRSC-478: Setting Oracle Clusterware active version on the last node to be upgraded
2018/12/23 12:40:21 CLSRSC-482: Running command: '/u01/app/12.1.0/grid/bin/crsctl set crs activeversion'
Started to upgrade the Oracle Clusterware. This operation may take a few minutes.
Started to upgrade the OCR.
Started to upgrade the CSS.
The CSS was successfully upgraded.
Started to upgrade Oracle ASM.
Started to upgrade the CRS.
The CRS was successfully upgraded.
Successfully upgraded the Oracle Clusterware.
Oracle Clusterware operating version was successfully set to 12.1.0.2.0
2018/12/23 12:42:33 CLSRSC-479: Successfully set Oracle Clusterware active version
2018/12/23 12:42:39 CLSRSC-476: Finishing upgrade of resource types
2018/12/23 12:43:00 CLSRSC-482: Running command: 'upgrade model -s 11.2.0.4.0 -d 12.1.0.2.0 -p last'
2018/12/23 12:43:00 CLSRSC-477: Successfully completed upgrade of resource types
2018/12/23 12:43:34 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

After running the rootupgrade.sh script on both nodes, click the OK button in the installer.

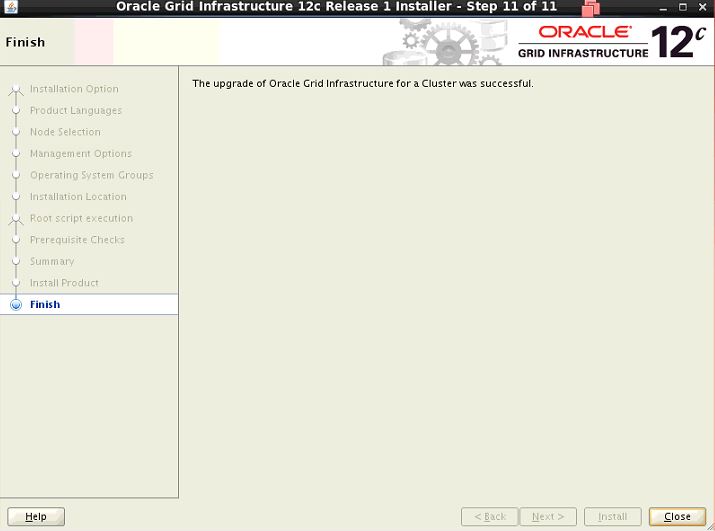

Check the Clusterware upgrade version:

[root@racpb1 ~]# cd /u01/app/12.1.0/grid/bin/
[root@racpb1 bin]# ./crsctl query crs activeversion
Oracle Clusterware active version on the cluster is [12.1.0.2.0]

Note: If you are upgrading from 11.2.0.1/11.2.0.2/11.2.0.3 version to 12cR1 then you may need to apply additional patches before you proceed with upgrade.

Start the 11g database :

[oracle@racpb1 ~]$ srvctl start database -d orcl11g
[oracle@racpb1 ~]$ srvctl status database -d orcl11g
Instance orcl11g1 is running on node racpb1
Instance orcl11g2 is running on node racpb2

Upgrade RAC database from 11gR2 to 12cR1 :-

Backup the database before the upgrade :

Take a level 0 backup or a cold backup of the database.
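The post does not show the backup commands, so the following is only one possible way to take the level 0 backup with RMAN. The helper write_level0_cmdfile, the file names and the /u01/backup destination are all hypothetical; adjust the destination, channels and retention to your own backup policy.

```shell
# Write an RMAN command file that takes a level 0 backup of the database,
# the archived logs and the current control file.
# (write_level0_cmdfile and the backup piece name prefixes are hypothetical.)
write_level0_cmdfile() {
    # $1: RMAN command file to create; $2: backup destination directory
    cat > "$1" <<EOF
RUN {
  BACKUP INCREMENTAL LEVEL 0 FORMAT '$2/db_l0_%U' DATABASE PLUS ARCHIVELOG;
  BACKUP FORMAT '$2/ctl_%U' CURRENT CONTROLFILE;
}
EOF
}

# Usage from the 11g environment (not executed here):
#   write_level0_cmdfile /tmp/level0.rman /u01/backup
#   rman target / cmdfile=/tmp/level0.rman log=/tmp/level0.log
```

Keeping the commands in a file makes it easy to review them and to keep the RMAN log alongside the upgrade notes.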

Database upgrade Pre-check :

- Creating Stage for 12c database software.

[oracle@racpb1 ~]$ mkdir -p /u01/stage
[oracle@racpb1 ~]$ chmod -R 755 /u01/stage/

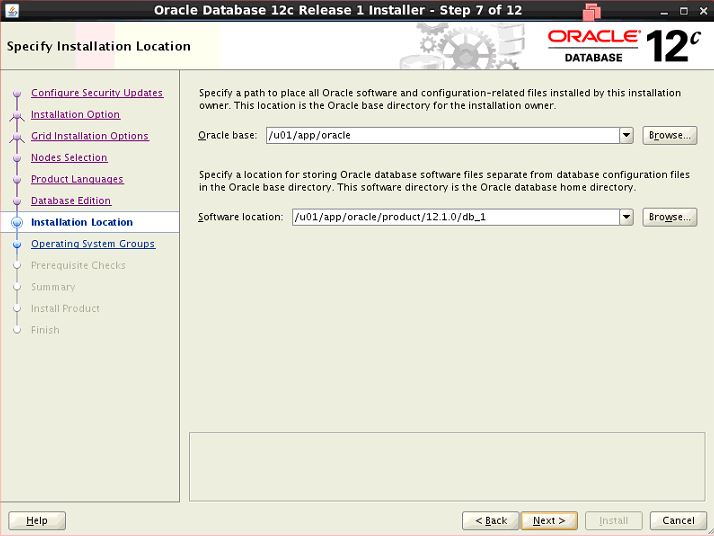

- Creating directory for 12c ORACLE_HOME.

[oracle@racpb1 ~]$ mkdir -p /u01/app/oracle/product/12.1.0/db_1
[oracle@racpb1 ~]$ chown -R oracle:oinstall /u01/app/oracle/product/12.1.0/db_1
[oracle@racpb1 ~]$ chmod -R 775 /u01/app/oracle/product/12.1.0/db_1

- Check the preupgrade status :

Run runcluvfy.sh from grid stage location :

[oracle@racpb1 grid]$ ./runcluvfy.sh stage -pre dbinst -upgrade -src_dbhome /u01/app/oracle/product/11.2.0/dbhome_1 -dest_dbhome /u01/app/oracle/product/12.1.0/db_1 -dest_version 12.1.0.2.0

The above command must complete successfully before upgrading the database from 11gR2 to 12cR1.
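Before unzipping the software into the stage and installing into the new home, it can help to confirm that the target filesystems have enough free space. A minimal sketch using POSIX df output; free_kb and has_free_space are hypothetical helpers, and the 8 GB figure in the usage line is an illustrative assumption, not an Oracle requirement.

```shell
# Print the available kilobytes on the filesystem that holds directory $1.
# (free_kb and has_free_space are hypothetical helpers.)
free_kb() {
    # -P gives the portable one-line-per-filesystem format, -k reports in KB
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Succeed when directory $1 has at least $2 kilobytes available.
has_free_space() {
    [ "$(free_kb "$1")" -ge "$2" ]
}

# Usage (not executed here); 8388608 KB = 8 GB, an illustrative threshold:
#   has_free_space /u01/stage 8388608 || echo "Not enough space in /u01/stage"
#   has_free_space /u01/app/oracle/product/12.1.0/db_1 8388608 || echo "Not enough space for the 12c home"
```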

Unzip 12c database software in stage :

[oracle@racpb1 12102_64bit]$ unzip -d /u01/stage/ linuxamd64_12102_database_1of2.zip
[oracle@racpb1 12102_64bit]$ unzip -d /u01/stage/ linuxamd64_12102_database_2of2.zip

Unset the 11g environment :

unset ORACLE_HOME
unset ORACLE_BASE
unset ORACLE_SID

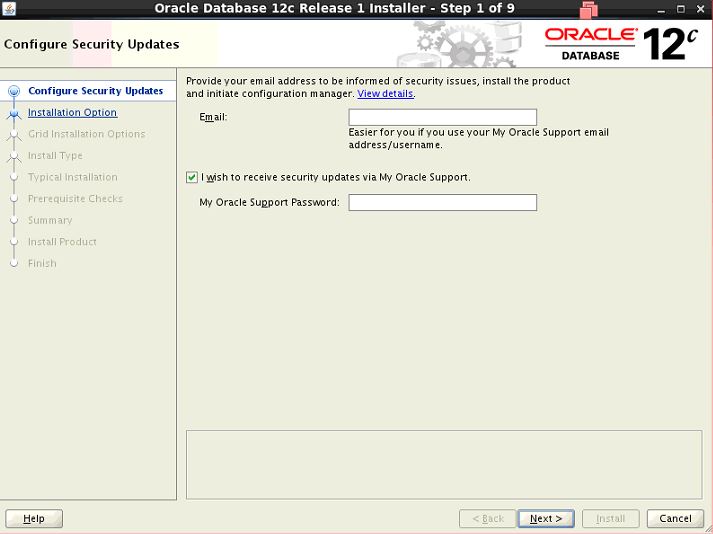

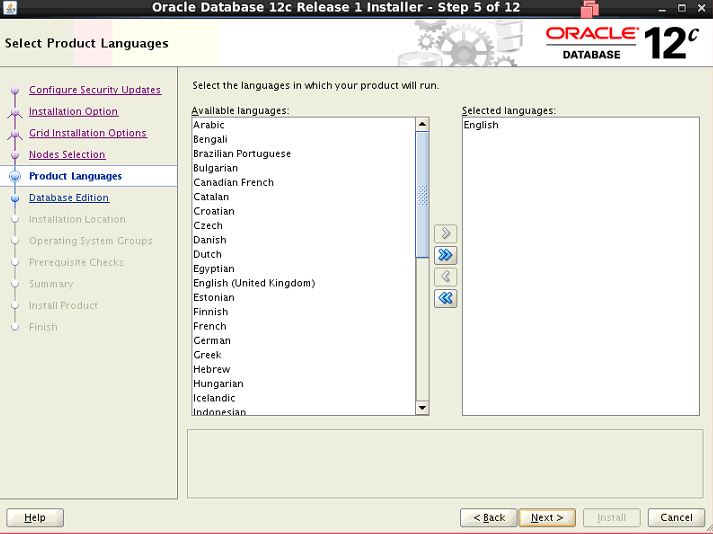

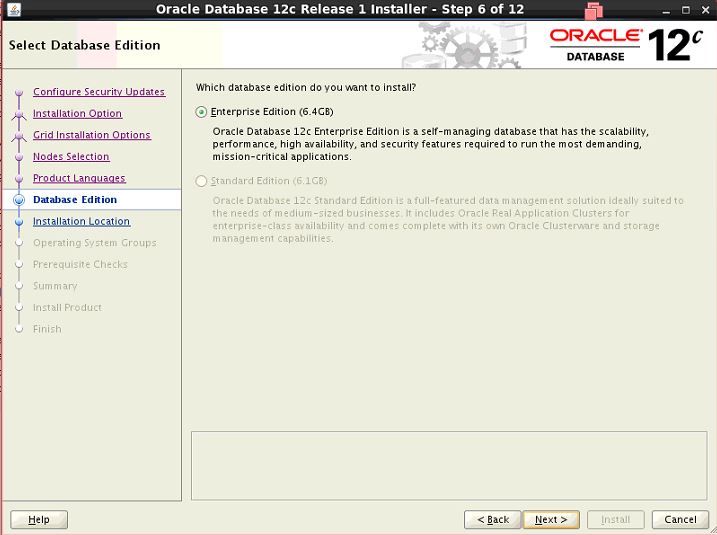

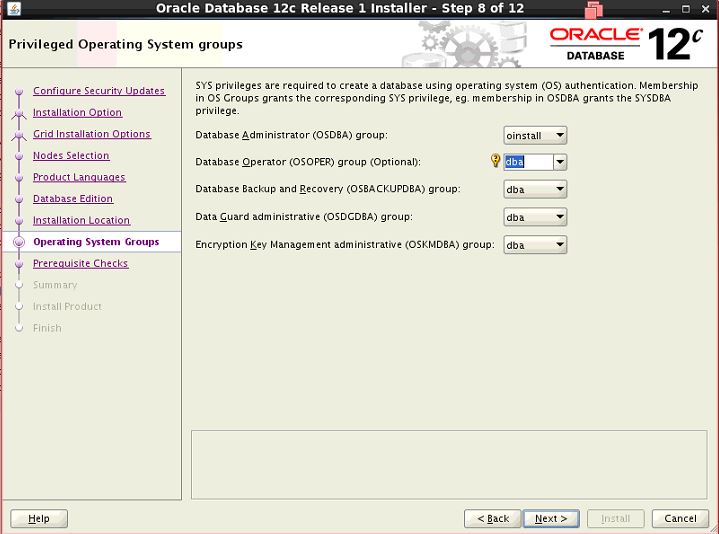

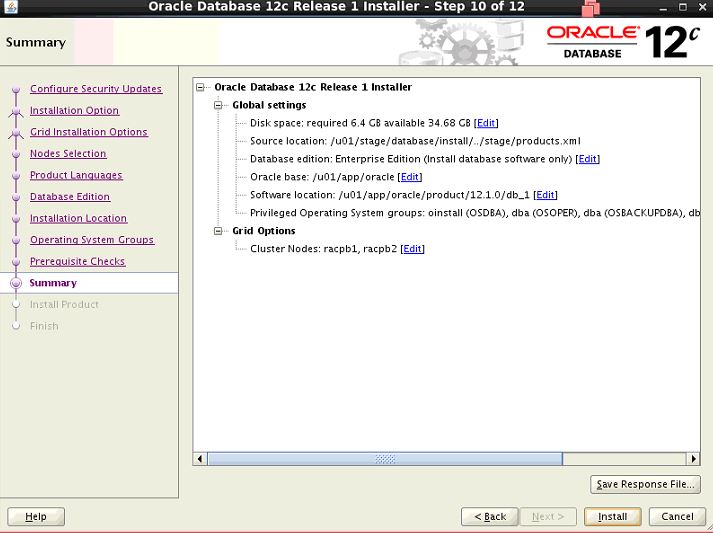

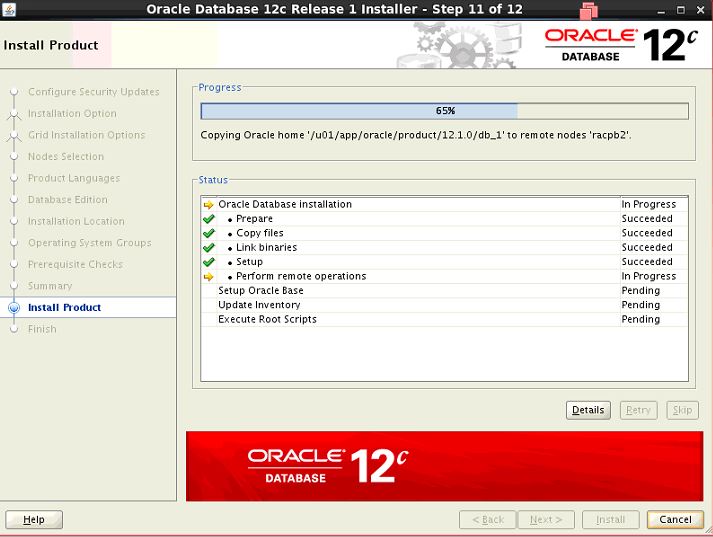

Install the 12.1.0.2 using the software only installation :

Set the new 12c environment and execute runInstaller.

[oracle@racpb1 ~]$ export ORACLE_HOME=/u01/app/oracle/product/12.1.0/db_1
[oracle@racpb1 ~]$ export ORACLE_BASE=/u01/app/oracle
[oracle@racpb1 ~]$ export ORACLE_SID=orcl12c
[oracle@racpb1 ~]$
[oracle@racpb1 ~]$ cd /u01/stage/database/
[oracle@racpb1 database]$ ./runInstaller
Starting Oracle Universal Installer...
Checking Temp space: must be greater than 500 MB. Actual 8533 MB Passed
Checking swap space: must be greater than 150 MB. Actual 5999 MB Passed
Checking monitor: must be configured to display at least 256 colors. Actual 16777216 Passed
Preparing to launch Oracle Universal Installer from /tmp/OraInstall2018-12-23_02-05-54PM. Please wait ...
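The 12c settings exported above can be kept in a shell function next to the existing grid and 11g functions in .bash_profile, so switching homes is a single command. This is a sketch only: the function name db12c is hypothetical, and the LD_LIBRARY_PATH and TNS_ADMIN lines simply mirror the 11g function rather than anything the installer requires.

```shell
# Environment for the new 12c database home, modelled on the 11g function.
# (db12c is a hypothetical name; the paths are the ones used in this post.)
db12c() {
    ORACLE_BASE=/u01/app/oracle
    ORACLE_HOME=/u01/app/oracle/product/12.1.0/db_1
    # SID exported in the walkthrough; after the upgrade the instances in
    # this post keep their original names (orcl11g1, orcl11g2)
    ORACLE_SID=orcl12c
    LD_LIBRARY_PATH=$ORACLE_HOME/lib:/usr/lib
    TNS_ADMIN=$ORACLE_HOME/network/admin
    PATH=$ORACLE_HOME/bin:$PATH
    export ORACLE_BASE ORACLE_HOME ORACLE_SID LD_LIBRARY_PATH TNS_ADMIN PATH
}

# Usage (not executed here):
#   db12c
#   echo $ORACLE_HOME
```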

Skip the security updates from Oracle Support.

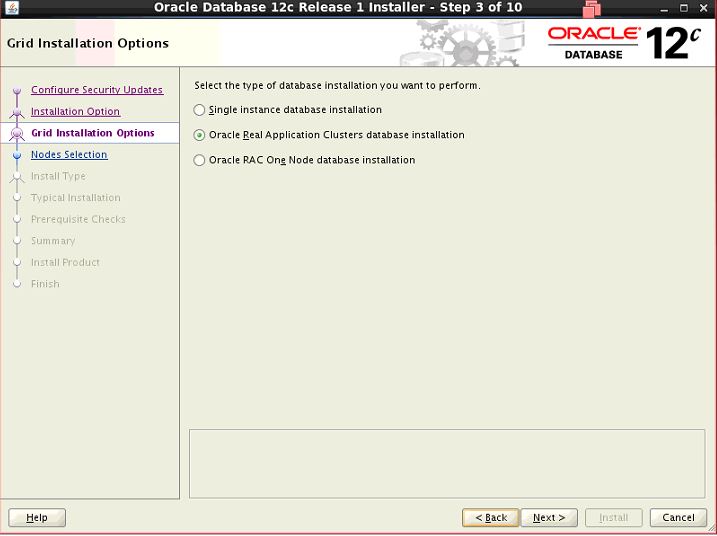

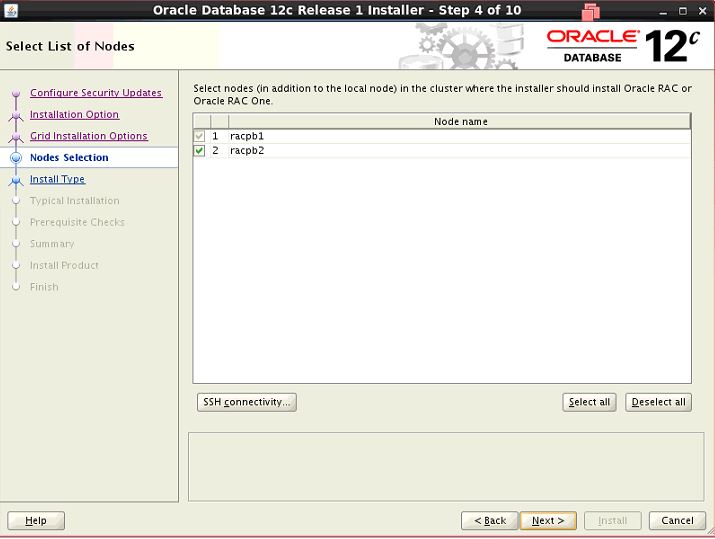

Select RAC database installation.

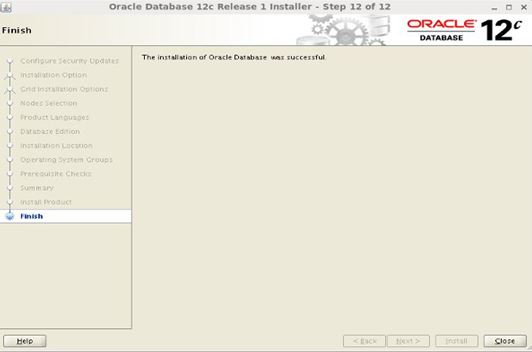

After the 12c database software installation is done, run the script that the installer prompts for as root on both nodes.

Run preupgrd.sql script :

- The pre-upgrade script identifies any prerequisite tasks that must be done on the database before the upgrade.

- Execute the preupgrd.sql script in the existing 11.2.0.4 database from the newly installed 12c ORACLE_HOME.

[oracle@racpb1 ~]$ . .bash_profile
[oracle@racpb1 ~]$ 11g
[oracle@racpb1 ~]$ cd /u01/app/oracle/product/12.1.0/db_1/rdbms/admin/
[oracle@racpb1 admin]$ sqlplus / as sysdba
SQL*Plus: Release 11.2.0.4.0 Production on Mon Dec 24 03:35:26 2018
Copyright (c) 1982, 2013, Oracle. All rights reserved.
Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,
Data Mining and Real Application Testing options
SQL> @preupgrd.sql
Loading Pre-Upgrade Package...
***************************************************************************
Executing Pre-Upgrade Checks in ORCL11G...
***************************************************************************
====>> ERRORS FOUND for ORCL11G <<====
The following are *** ERROR LEVEL CONDITIONS *** that must be addressed
prior to attempting your upgrade.
Failure to do so will result in a failed upgrade.
You MUST resolve the above errors prior to upgrade
***************************************************************************
====>> PRE-UPGRADE RESULTS for ORCL11G <<====
ACTIONS REQUIRED:
1. Review results of the pre-upgrade checks:
/u01/app/oracle/cfgtoollogs/orcl11g/preupgrade/preupgrade.log
2. Execute in the SOURCE environment BEFORE upgrade:
/u01/app/oracle/cfgtoollogs/orcl11g/preupgrade/preupgrade_fixups.sql
3. Execute in the NEW environment AFTER upgrade:
/u01/app/oracle/cfgtoollogs/orcl11g/preupgrade/postupgrade_fixups.sql
***************************************************************************
Pre-Upgrade Checks in ORCL11G Completed.
***************************************************************************
***************************************************************************
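If the SQL*Plus session is spooled to a file, the important parts of the pre-upgrade output can be picked out automatically: whether errors were reported, and where the generated fixup scripts are. The sketch below assumes the message wording shown above and GNU grep (for the -o option); both helper names and the spool path are hypothetical.

```shell
# Succeed when the saved preupgrd.sql output reports error-level conditions.
# (preupgrade_has_errors and preupgrade_fixup_scripts are hypothetical helpers.)
preupgrade_has_errors() {
    # $1: file holding the spooled preupgrd.sql output
    grep -q 'ERRORS FOUND for' "$1"
}

# Print the paths of the generated pre- and post-upgrade fixup scripts.
preupgrade_fixup_scripts() {
    # -o prints only the matching path (GNU grep)
    grep -oE '/[^ ]*(preupgrade_fixups|postupgrade_fixups)\.sql' "$1"
}

# Usage (not executed here), with /tmp/preupgrade_run.log as the spool file:
#   preupgrade_has_errors /tmp/preupgrade_run.log && echo "Resolve the errors in preupgrade.log first"
#   preupgrade_fixup_scripts /tmp/preupgrade_run.log
```

In this post the first script is run in the 11g environment before the upgrade and the second in the 12c environment after it, as the ACTIONS REQUIRED list states.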

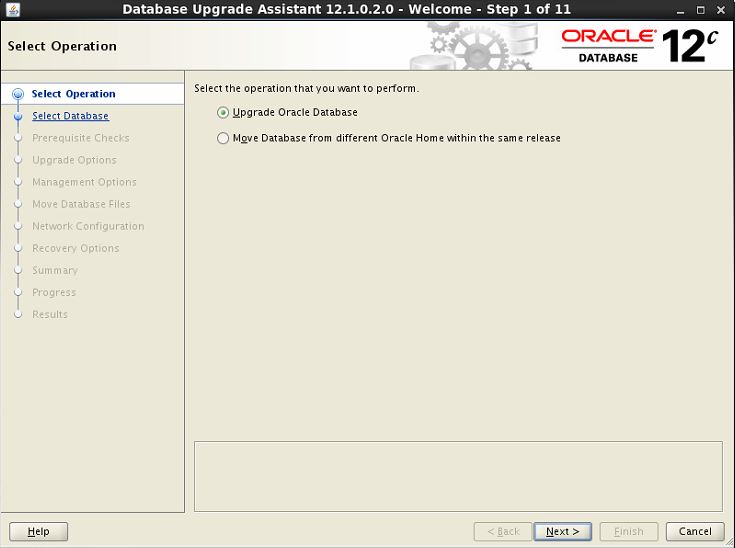

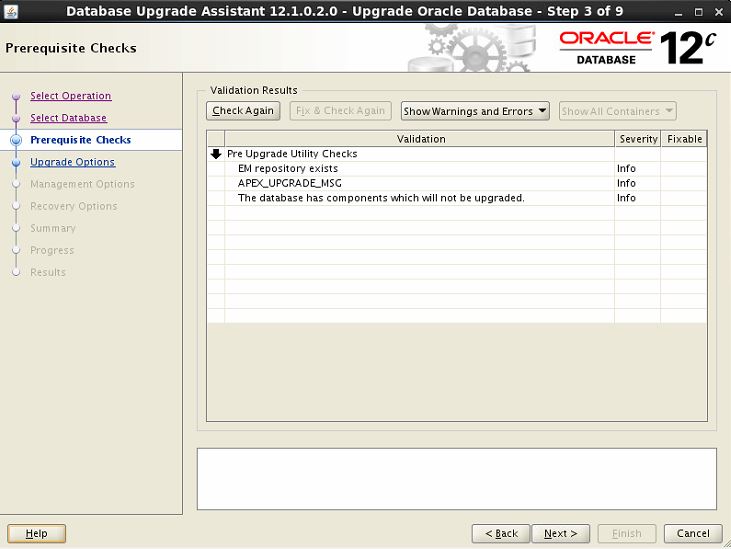

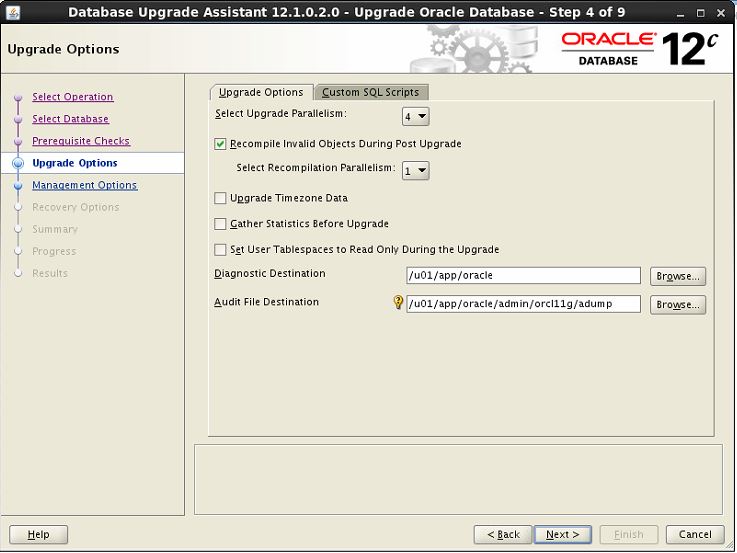

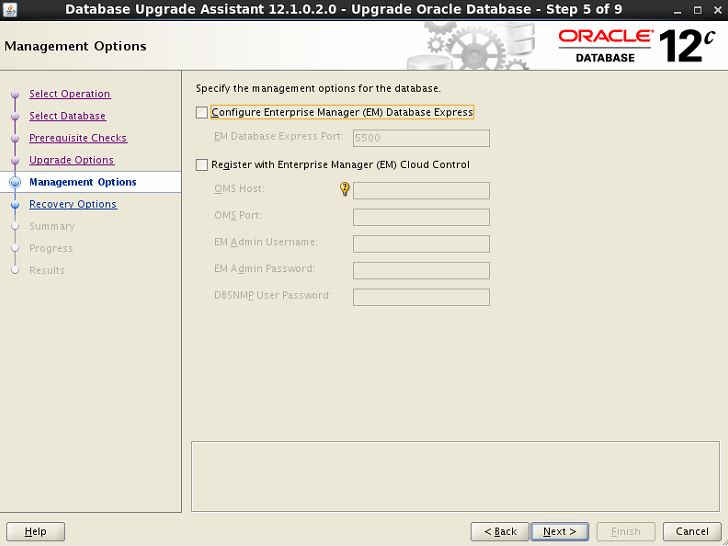

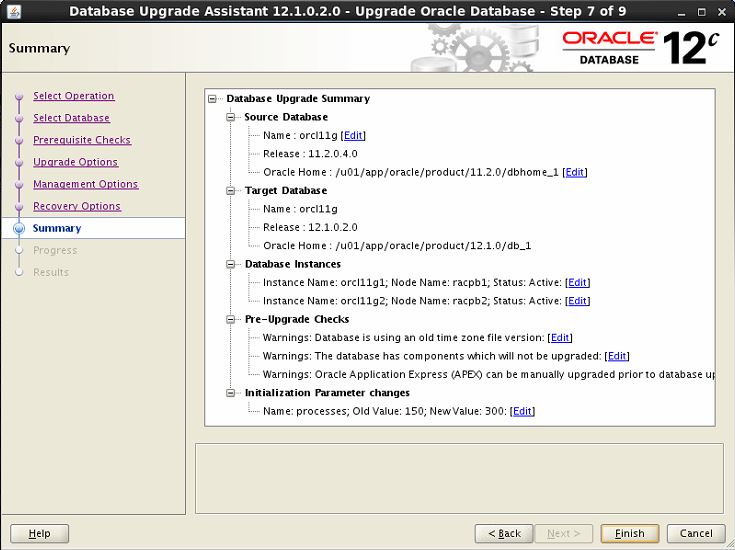

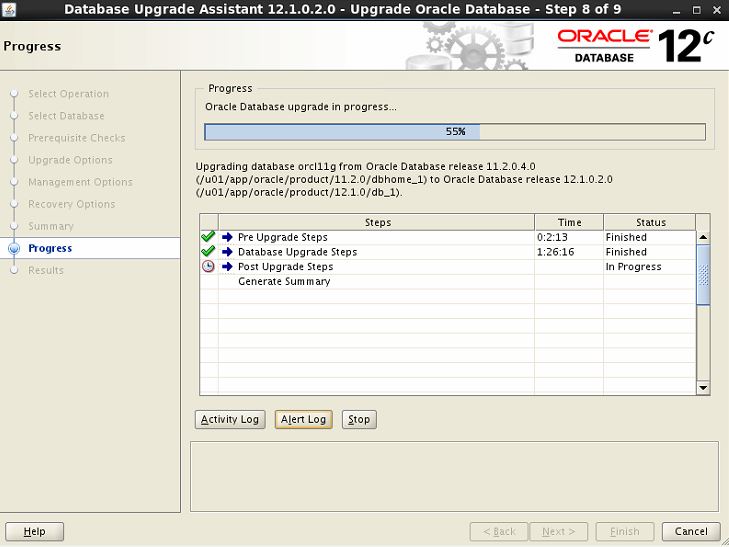

Run the DBUA to start the database upgrade :

Check Database version and configuration :-

[oracle@racpb1 ~]$ srvctl config database -d orcl11g
Database unique name: orcl11g
Database name: orcl11g
Oracle home: /u01/app/oracle/product/12.1.0/db_1
Oracle user: oracle
Spfile: +DATA/orcl11g/spfileorcl11g.ora
Password file:
Domain: localdomain.com
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools:
Disk Groups: DATA
Mount point paths:
Services:
Type: RAC
Start concurrency:
Stop concurrency:
OSDBA group: dba
OSOPER group: oinstall
Database instances: orcl11g1,orcl11g2
Configured nodes: racpb1,racpb2
Database is administrator managed
[oracle@racpb1 ~]$ srvctl status database -d orcl11g
Instance orcl11g1 is running on node racpb1
Instance orcl11g2 is running on node racpb2
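The key line in this output is "Oracle home", which should now point at the 12c home. A small sketch that extracts it so the check can be scripted, assuming the srvctl config format shown above; configured_oracle_home is a hypothetical helper name.

```shell
# Print the Oracle home registered for a database in the cluster.
# Reads `srvctl config database -d <db>` output on stdin.
# (configured_oracle_home is a hypothetical helper.)
configured_oracle_home() {
    sed -n 's/^Oracle home: *//p'
}

# Usage (not executed here):
#   home=$(srvctl config database -d orcl11g | configured_oracle_home)
#   [ "$home" = /u01/app/oracle/product/12.1.0/db_1 ] && echo "Database runs from the 12c home"
```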

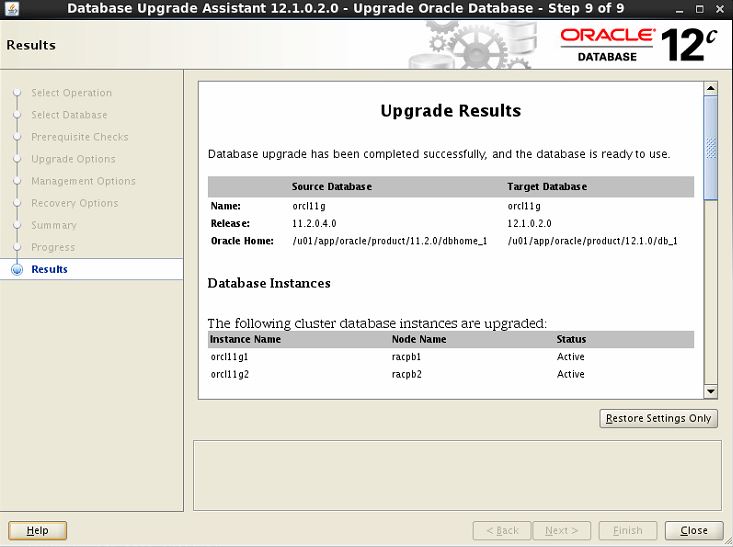

The RAC database has been successfully upgraded from 11g to 12c (Grid & DB).

Catch Me On:- Hariprasath Rajaram
Telegram: https://t.me/joinchat/I_f4DhGF_Zifr9YZvvMkRg
LinkedIn: https://www.linkedin.com/in/hari-prasath-aa65bb19/
Facebook: https://www.facebook.com/HariPrasathdba
FB Group: https://www.facebook.com/groups/894402327369506/
FB Page: https://www.facebook.com/dbahariprasath/?
Twitter: https://twitter.com/hariprasathdba